- An Azure Search Index data source with a soft delete policy

- A function to run the Indexer

- A function to permanently delete the soft-deleted blobs

IMPLEMENTING A SOFT DELETE POLICY IN THE AZURE SEARCH DATA SOURCE

The following code shows how to create or update the Azure Search Index data source to use a soft delete policy:

private static DataSource CreateBlobDataSource()

{

DataSource datasource = DataSource.AzureBlobStorage(

name: "azure-blob",

storageConnectionString: AzureBlobConnectionString,

containerName: "search-data");

datasource.DataDeletionDetectionPolicy = new SoftDeleteColumnDeletionDetectionPolicy("IsDeleted", true);

return datasource;

}


The soft delete makes use of a blob metadata property: IsDeleted. This document goes into more detail on Blob Indexing and Soft Deletes. When our application or process needs to remove a blob from Azure Search, we first need to update the blob metadata property (IsDeleted) and set its value to true. The sample code below does exactly this:

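var sa = CloudStorageAccount.Parse(conString);

var blobClient = sa.CreateCloudBlobClient();

var container = blobClient.GetContainerReference("<yourcontainername>");

var blob = container.GetBlockBlobReference("<yourblobname>");

// Load the existing metadata first so that saving does not overwrite it

await blob.FetchAttributesAsync();

// Flag the blob as soft-deleted, then persist the change to the storage service

blob.Metadata["IsDeleted"] = "true";

await blob.SetMetadataAsync();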


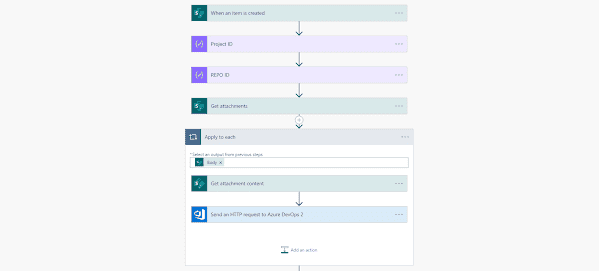

With these two code snippets covering the delete policy and the metadata property, we can now create the Functions that run our Indexer and delete the unwanted blobs. At this point, it’s important to note that there are different ways to solve the problem:

a) We could implement everything within one Function. However, this does not scale as well, especially if we have lots of blobs to remove.

b) We could use a number of Functions that work together to orchestrate the solution:

- 1 Function to run the Indexer and update the Azure Search Index

- 1 Function to retrieve a list of all blobs to be deleted and put them in a queue

- 1 Function that deletes the blobs using a queue trigger. This one will fan-out depending on the queue size.

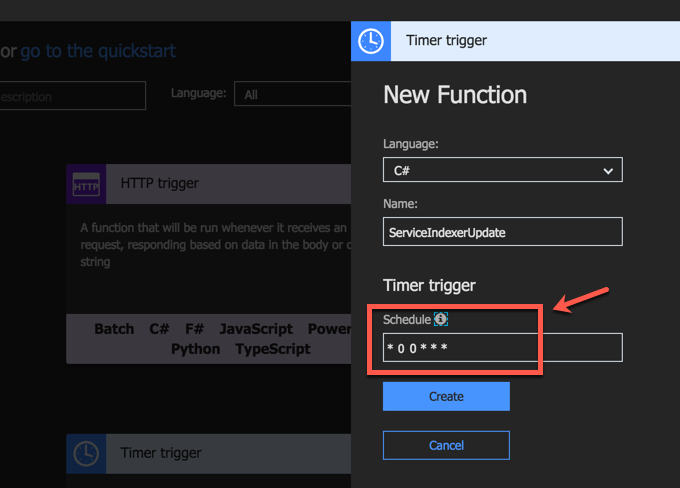

A FUNCTION TO RUN THE AZURE SEARCH INDEXER

Although this operation doesn’t require a Function to run, since indexers have their own schedulers, implementing it through a function means that we can orchestrate the whole process to run in unison.

First, we need to create a Function that runs on a schedule defined as a CRON expression. I’ve configured the schedule to run every night at midnight, which in the six-field format used by Azure Functions timer triggers is 0 0 0 * * *.

The Function code that runs the indexer is shown below:

using System;

using System.Configuration;

using System.Net.Http;

using System.Threading.Tasks;

using Microsoft.Azure.Search;

public static async Task Run(TimerInfo myTimer, TraceWriter log)

{

// Connect to the search service with the name and admin key from the app settings

SearchServiceClient searchService = new SearchServiceClient(

searchServiceName: ConfigurationManager.AppSettings["SearchServiceName"],

credentials: new SearchCredentials(ConfigurationManager.AppSettings["SearchServiceAdminApiKey"]));

var indexerName = ConfigurationManager.AppSettings["indexerName"];

var exists = await searchService.Indexers.ExistsAsync(indexerName);

if (exists)

{

// Start an on-demand run of the indexer

await searchService.Indexers.RunAsync(indexerName);

log.Info($"Indexer {indexerName} was executed at: {DateTime.Now}");

}

// call the next function to retrieve the deleted blobs

using (var client = new HttpClient())

{

await client.GetAsync("<AzureFunctionUri>");

}

}


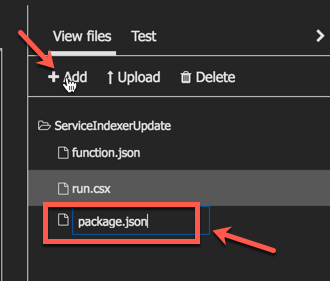

You’ll notice that this Function makes use of the Azure Search namespaces, which are not “natively” available to Azure Functions.

FYI – A number of .NET APIs/namespaces are available by default to Azure Functions as part of the runtime. These namespaces can be found here.

To make this namespace available to our Function, we need to add the appropriate NuGet package dependency. Add a project.json file within your Function. In the portal, under the View Files tab, click on the +Add link and add a new file:

Open the file and add the necessary dependency to the Azure Search SDK as per the example below:

{

"frameworks": {

"net46":{

"dependencies": {

"Microsoft.Azure.Search": "3.0.4"

}

}

}

}

As soon as you save and close the file, Kudu (the super AppService orchestrator) will attempt to restore the designated NuGet packages. This should generate logs similar to the ones below:

2018-01-23T06:54:17 Welcome, you are now connected to log-streaming service.

2018-01-23T06:54:32.688 Function started (Id=5c37f45e-c995-4d76-b424-3722316e6338)

2018-01-23T06:54:32.688 Package references have been updated.

2018-01-23T06:56:17 No new trace in the past 1 min(s).

2018-01-23T06:57:17 No new trace in the past 2 min(s).

2018-01-23T06:57:16.700 Starting NuGet restore

2018-01-23T06:57:19.921 Restoring packages for D:\home\site\wwwroot\eventgriddemo\project.json...

2018-01-23T06:57:20.467 Restoring packages for D:\home\site\wwwroot\eventgriddemo\project.json...

// logs omitted for clarity

2018-01-23T06:57:23.278 Installing Newtonsoft.Json 9.0.1.

2018-01-23T06:57:23.309 Installing Microsoft.Rest.ClientRuntime 2.3.7.

2018-01-23T06:57:24.801 Installing Microsoft.Rest.ClientRuntime.Azure 3.3.6.

2018-01-23T06:57:25.792 Installing Microsoft.Spatial 7.2.0.

2018-01-23T06:57:27.039 Installing Microsoft.Azure.Search 3.0.4.


A FUNCTION TO RETRIEVE ALL SOFT-DELETED BLOBS

Once the indexer has run successfully, we can retrieve all the blobs that need to be deleted. The Function below uses a Storage Queue output binding to populate a queue with the list of blobs that need to be deleted. This time I chose to use a .NET Standard C# Function:

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.AspNetCore.Http;

using Microsoft.Azure.WebJobs.Host;

using System.Threading.Tasks;

using System.Collections.Generic;

using Microsoft.WindowsAzure.Storage.Blob;

using Microsoft.WindowsAzure.Storage;

using System.Linq;

using System;

namespace SearchFunctions

{

public static class Function1

{

[FunctionName("RetrieveDeletedBlobs")]

public static async Task<ICollector<string>> Run(

[HttpTrigger(AuthorizationLevel.Function, "get", Route = null)]HttpRequest req,

[Queue("blobs-to-delete", Connection = "StorageQueueConnectionString")] ICollector<string> outputQueueItems,

TraceWriter log)

{

log.Info("Adding blobs to the delete queue");

var deletedBlobUris = await ListAllDeletedBlobUris();

foreach (var blobUri in deletedBlobUris)

{

outputQueueItems.Add(blobUri);

}

return outputQueueItems;

}

private static async Task<List<string>> ListAllDeletedBlobUris()

{

var sa = CloudStorageAccount.Parse(Environment.GetEnvironmentVariable("StorageBlobConnectionString"));

var blobClient = sa.CreateCloudBlobClient();

// Use the same container that the search data source indexes

var container = blobClient.GetContainerReference("search-data");

var results = await ListBlobsAsync(container);

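// Keep only block blobs whose IsDeleted metadata is "true"; blobs without the property are skipped

var deletedBlobs = results.OfType<CloudBlockBlob>()

.Where(b => b.Metadata.TryGetValue("IsDeleted", out var isDeleted)

&& string.Equals(isDeleted, "true", StringComparison.OrdinalIgnoreCase));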

return deletedBlobs.Select(b => b.Uri.AbsoluteUri).ToList();

}

public static async Task<List<IListBlobItem>> ListBlobsAsync(CloudBlobContainer container)

{

BlobContinuationToken continuationToken = null;

List<IListBlobItem> results = new List<IListBlobItem>();

do

{

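var response = await container.ListBlobsSegmentedAsync(

null, // prefix: no filter, list everything

true, // useFlatBlobListing: include blobs inside virtual directories

BlobListingDetails.Metadata, // return metadata so IsDeleted can be inspected

null, // maxResults: use the service default page size

continuationToken,

null, null); // default request options and operation context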

continuationToken = response.ContinuationToken;

results.AddRange(response.Results);

}

while (continuationToken != null);

return results;

}

}

}
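The metadata check is the part of this Function that is easiest to get wrong, so here is a minimal sketch of it in isolation. It assumes that a blob counts as soft-deleted only when its IsDeleted metadata value is "true" (compared case-insensitively), which is meant to mirror the marker value configured in the data source policy. The SoftDeleteFilter class and the IsSoftDeleted method are hypothetical names used for illustration; they are not part of the Storage SDK.

```csharp
using System;
using System.Collections.Generic;

public static class SoftDeleteFilter
{
    // Hypothetical helper: returns true only when the metadata contains
    // an IsDeleted entry whose value is "true" (case-insensitive).
    // Blobs with no metadata, or without the property, are treated as live.
    public static bool IsSoftDeleted(IDictionary<string, string> metadata)
    {
        return metadata != null
            && metadata.TryGetValue("IsDeleted", out var value)
            && string.Equals(value, "true", StringComparison.OrdinalIgnoreCase);
    }
}
```

Inside ListAllDeletedBlobUris, a helper like this could be applied as .Where(b => SoftDeleteFilter.IsSoftDeleted(b.Metadata)). Using TryGetValue instead of the indexer means a blob that was never tagged does not throw a KeyNotFoundException and stop the whole run.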


For this Function to work, you’ll need to define the following Application Settings in your Function App:

{

"AzureWebJobsStorage": "<StorageConnectionString>",

"AzureWebJobsDashboard": "<StorageConnectionString>",

"StorageQueueConnectionString": "<StorageQueueConnectionString>",

"StorageBlobConnectionString": "<StorageBlobConnectionString>"

}

A FUNCTION TO DELETE THE BLOB DATA

Finally, we need a Function that is triggered by the Azure Storage Queue and deletes the blob named in the queue message. The queue is populated by the previous Function. By now you can see how powerful bindings are and how they simplify the code by removing much of the plumbing. The code below shows how this Function is implemented:

using System;

using System.Threading.Tasks;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Host;

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Blob;

namespace SearchFunctions

{

public static class DeleteBlob

{

[FunctionName("DeleteBlob")]

public static async Task Run([QueueTrigger("blobs-to-delete", Connection = "StorageQueueConnectionString")]string myQueueItem,

TraceWriter log)

{

await DeleteBlobByUri(myQueueItem);

log.Info($"C# Queue trigger function processed: {myQueueItem}");

}

private static async Task DeleteBlobByUri(string blobUri)

{

// The storage account supplies the credentials needed to authorize the delete

var sa = CloudStorageAccount.Parse(Environment.GetEnvironmentVariable("StorageBlobConnectionString"));

// The queue message holds the blob's absolute URI, so no container lookup is needed

var blockBlob = new CloudBlockBlob(new Uri(blobUri), sa.Credentials);

await blockBlob.DeleteIfExistsAsync();

}

}

}


SUMMARY

I hope this post did a good job of showcasing the flexibility and power of Azure Serverless services (i.e. Functions in this instance) and how we can use multiple, small components to create a larger, more complex solution.

About the author:

Christos Matskas, a developer, speaker, writer, Microsoft Premier Field Engineer (PFE) and geek. I currently live with my family in the UK. Learn more at: https://cmatskas.com/about/

Reference:

Matskas, C. (2018). Keeping your Azure Search Index up-to-date with Azure Functions. [online] Available at: https://cmatskas.com/keeping-your-azure-search-index-up-to-date-with-azure-functions/ [Accessed 20 Feb. 2018].

Using a SharePoint Online list as a Knowledge source via ACTIONS in Copilot AI Studio
