Microsoft 365 Copilot is an AI-powered tool that helps users to be more productive by providing personalized, relevant, and actionable responses. In this blog post, we’ll explore some of the key features that enable Copilot to deliver such accurate results, including the Semantic Index, the distinction between Tenant Boundary and Service Boundary, Grounding, and the data flow of a prompt. These features work together to provide a seamless and intuitive experience for the user, allowing them to get the most out of their Microsoft 365 experience.

Microsoft 365 Copilot is not only a powerful tool for productivity, but also a secure and compliant solution. With its advanced data protection and governance features, Copilot ensures that your data stays within the Microsoft 365 service boundary and is secured based on existing security, compliance, and privacy policies already deployed by your organization.

Let’s get into the first topic, the semantic index.

Semantic Index with Graph in Copilot for M365

The Semantic Index for Copilot is a feature that helps Copilot understand the context and deliver more accurate results. It builds upon keyword matching, personalization, and social matching capabilities within Microsoft 365 by creating vectorized indices to enable conceptual understanding. This means that instead of using traditional methods for querying based on exact matches or predefined criteria, the Semantic Index for Copilot finds the most similar or relevant data based on the semantic or contextual meaning.

To do this, it works with Microsoft Graph to create a sophisticated map of all data and content in your organization.

Copilot for Microsoft 365 only surfaces organizational data to which individual users have at least view permissions. Prompts, responses, and data accessed through Microsoft Graph aren’t used to train foundation LLMs, including those used by Microsoft Copilot for Microsoft 365.

For example, if you ask Copilot to find sales numbers for Microsoft in FY23, the Semantic Index for Copilot will add adjacent words and phrases such as “revenue,” “financial results,” and “earnings report” into the search to widen the search area and provide the most relevant result. This is possible because the Semantic Index creates vectorized indices to enable conceptual understanding, where semantically similar data points are clustered together in the vector space. This allows Copilot to handle a much broader set of search queries beyond “exact match” and find the most similar or relevant data based on the semantic or contextual meaning.
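To make the idea of "semantically similar data points are clustered together" more concrete, here is a minimal sketch of ranking by vector similarity. It is not how the Semantic Index is actually implemented: the three-dimensional vectors, the document titles, and the function names are hypothetical and hand-picked for illustration, whereas a real index would use embeddings produced by a model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-made embeddings (assumption: real ones come from a model).
documents = {
    "FY23 earnings report": [0.9, 0.8, 0.1],
    "Quarterly revenue summary": [0.8, 0.9, 0.2],
    "Office party planning": [0.1, 0.2, 0.9],
}

# Hypothetical embedding of the prompt "sales numbers for FY23".
query_vector = [0.85, 0.85, 0.1]

def rank_by_similarity(query, docs):
    """Return document titles ordered from most to least similar to the query."""
    return sorted(
        docs,
        key=lambda title: cosine_similarity(query, docs[title]),
        reverse=True,
    )

ranking = rank_by_similarity(query_vector, documents)
print(ranking)
```

In this toy data set, the two finance-related documents rank above the unrelated one even though none of the titles contains the word "sales". That is the effect the vectorized index is meant to achieve.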

Can data be excluded from the Semantic Index?

Yes, this is possible. In some situations it makes sense to exclude a SharePoint Online site from indexing by Microsoft Search and the Semantic Index at the tenant level, for example to protect confidential data such as payroll, human resources, or financial information.

How it works: Exclude SPO sites

Tenant Boundary vs. M365 Service Boundary

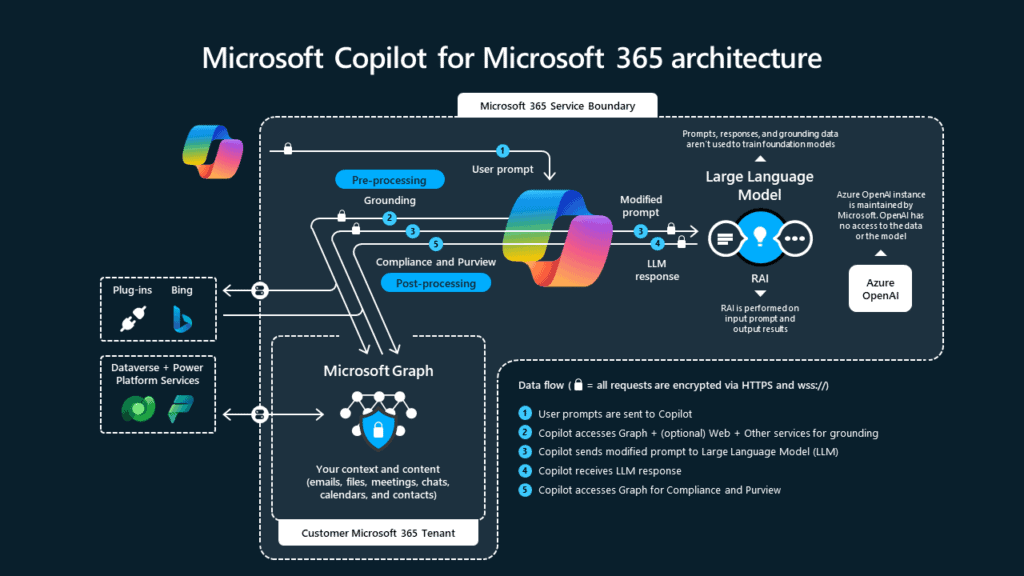

As described in the diagram above, there is a tenant boundary and a Microsoft 365 service boundary in the Copilot architecture. What are the differences?

M365 Service Boundary:

The Microsoft 365 Service Boundary defines the limits of the services and features offered by Microsoft 365. For customers in Europe, this means that the “Service Boundary” is the EU Data Boundary. The EU Data Boundary is a geographically defined boundary within which Microsoft has committed to storing and processing customer data for core commercial enterprise online services. Microsoft restricts the transfer of customer data outside the EU Data Boundary and provides transparency documentation describing the data that leaves the boundary. In combination, this means that customers within the EU Data Boundary can access the services and features offered by Microsoft 365 while their data is stored and processed within the EU Data Boundary.

As of 1 January 2024, Microsoft expanded the EU Data Boundary to include all personal data, including pseudonymized personal data found in system-generated logs.

Links to the EU Data Boundary: PII Data; Trust Center

Tenant Boundary:

As described in the previous section, tenants of European customers are located within the M365 Service Boundary, which is the EU Data Boundary. If you want to check exactly where your tenant is located, you can do so in the Microsoft 365 admin center.

Data flow of a prompt

Let's now look at the data flow of a prompt and answer the following questions:

How exactly does the data flow work?

Can data or the prompt leave the M365 service boundary?

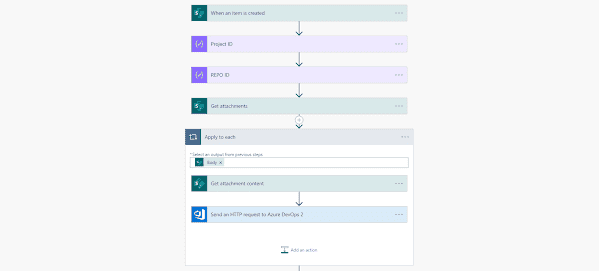

How exactly does the data flow work?

- When a user inputs a prompt in an app, such as Word or PowerPoint, Copilot receives it and processes it.

- Copilot pre-processes the input prompt through an approach called grounding. Grounding improves the specificity of the prompt by assigning extra context and content to the user’s prompt, drawing from information within the Microsoft Graph, such as emails, files, meetings, chats, and calendars. This helps Copilot provide answers that are relevant and actionable to the user’s specific task. REMEMBER: Copilot for Microsoft 365 only surfaces organizational data to which individual users have at least view permissions.

- Copilot then sends the prompt to the LLM (which sits outside your tenant but inside the EU Data Boundary) for processing. The data is encrypted in transit using HTTPS, then decrypted at the LLM and placed into a protected container called a TEE (Trusted Execution Environment). The data sent to the LLM is relevant to the prompt and is referred to as artifacts. These artifacts could include full emails or text, or text without filler words. The LLM fills in the filler words and pulls information from multiple sources to create a human-readable response. The data follows a process called Confidential Data Cleanroom (CDC), which means that any data being processed is deleted from the processing space once processing is complete. You can manually request references by writing “Provide Cites or references” in the prompt.

- After Copilot receives a response from the LLM, it performs post-processing on the response. This post-processing includes additional grounding calls to Microsoft Graph, responsible AI checks, security, compliance, and privacy reviews, and command generation. This ensures that the response is relevant, accurate, and adheres to the policies and regulations of the user’s organization. Copilot may also perform other checks, such as filtering offensive, adult, harmful, or malicious queries, and detecting personal, location, or time-related queries for special handling.

- The response from the LLM is returned to the app by Copilot, where the user can review and evaluate it.
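The steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions, not Copilot's actual implementation: the `Document` class, the keyword-overlap grounding, the `call_llm` stand-in, and the `BLOCKED_TERMS` check are all hypothetical simplifications. What it does show is the order of the stages and the rule that grounding only uses content the user is allowed to view.

```python
from dataclasses import dataclass

@dataclass
class Document:
    title: str
    text: str
    allowed_users: frozenset  # hypothetical stand-in for view permissions

def ground_prompt(prompt, user, documents):
    """Grounding: attach content the user may view that shares a word with the prompt."""
    words = set(prompt.lower().split())
    context = [
        doc.text
        for doc in documents
        if user in doc.allowed_users and words & set(doc.text.lower().split())
    ]
    return {"prompt": prompt, "context": context}

def call_llm(grounded):
    """Stand-in for the LLM call (assumption): echoes the context it was given."""
    if not grounded["context"]:
        return "No accessible content found."
    return "Based on your data: " + " ".join(grounded["context"])

BLOCKED_TERMS = {"password"}  # hypothetical policy list

def post_process(response):
    """Toy post-processing check: withhold responses containing a blocked term."""
    if any(term in response.lower() for term in BLOCKED_TERMS):
        return "Response withheld by policy check."
    return response

def handle_prompt(prompt, user, documents):
    """Run the stages in order: grounding, LLM call, post-processing."""
    return post_process(call_llm(ground_prompt(prompt, user, documents)))

docs = [
    Document("Q3 plan", "Q3 budget is approved", frozenset({"alice"})),
    Document("Payroll", "Payroll budget details", frozenset({"hr"})),
]
print(handle_prompt("What is the budget status", "alice", docs))
print(handle_prompt("What is the budget status", "bob", docs))
```

In this sketch, the payroll document never reaches the LLM stand-in for either user, because neither of them is in its `allowed_users` set. The filtering happens during grounding, before anything is sent for processing.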

Can data or the prompt leave the M365 service boundary?

When using Microsoft Copilot for Microsoft 365, data may leave the Microsoft 365 service boundary under the following circumstances:

Integration of Bing and web content:

Microsoft Copilot can access web content from Bing to provide relevant answers to user prompts through a process called “web grounding.” When enabled, Copilot generates an online search query based on the user’s prompt and relevant data in Microsoft 365. The query is sent to the Bing Search API to retrieve information from the web, which is then used to generate a comprehensive response. The user’s prompts and Copilot’s responses remain within the Microsoft 365 service boundary, while only the search query goes to the Bing Search API outside the service boundary. Web search queries may contain some confidential data, depending on the user’s prompt.
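The following sketch illustrates why a web search query can still carry confidential terms even though the prompt itself stays inside the service boundary. It is a simplified assumption, not the real query generation: the stop-word list and the function names `build_web_query` and `split_by_boundary` are hypothetical, and the actual query is generated by Copilot in a way this example does not reproduce.

```python
# Hypothetical stop-word list used to reduce a prompt to search keywords.
STOP_WORDS = {
    "how", "does", "the", "a", "an", "of", "for", "our",
    "what", "is", "with", "compare", "to", "in", "and",
}

def build_web_query(prompt):
    """Reduce a prompt to keywords by dropping punctuation and stop words."""
    words = [w.strip("?.,!") for w in prompt.split()]
    return " ".join(w for w in words if w and w.lower() not in STOP_WORDS)

def split_by_boundary(prompt, response):
    """Show which pieces stay inside the boundary and which are sent out."""
    return {
        "stays_in_service_boundary": {"prompt": prompt, "response": response},
        "sent_to_web_search": {"query": build_web_query(prompt)},
    }

flow = split_by_boundary(
    "How does the Contoso acquisition compare with market trends?",
    "(generated answer)",
)
print(flow["sent_to_web_search"])
```

Only the derived query leaves the boundary here, but it still contains "Contoso acquisition". This is why the wording of a prompt matters when web grounding is enabled.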

Plugins in Microsoft 365 Copilot:

To answer a user’s question, Microsoft Copilot can access other tools and services for Microsoft 365 experiences by using Microsoft Graph connectors or plugins. When plugins are enabled, Copilot checks if a specific plugin is needed to provide a suitable response to the user. If so, Copilot creates a search query on behalf of the user and sends it to the plugin. This query is based on the user’s input, Copilot’s interaction history, and the data accessible to the user in Microsoft 365. In this way, Copilot can provide the user with a comprehensive and relevant response. Since plugins are third-party tools, they determine how they handle data.

Conclusion

In summary, it is crucial to be aware of the data that Copilot can access and to have a clear understanding of the data within your organization. Ask yourself: is your data possibly overshared?

To safeguard your data, you can use Purview sensitivity labels or exclude sensitive information from the Semantic Index. Copilot adheres to the security and compliance measures established by your organization and does not use your data to train foundation LLMs. Furthermore, your data remains within the EU Data Boundary unless web grounding or plugins are enabled, providing an additional layer of protection. By taking these factors into account, you can use Copilot effectively to enhance productivity while ensuring the security and privacy of your data.

Disclaimer:

Please note that this entire blog article pertains to customers in Europe with a tenant in the EU Data Boundary.

Please note that the information provided is not intended to be legal advice and should not be relied upon as such. In case of any doubts or concerns, it is recommended to verify the information directly with Microsoft.



Reference:

Freudenberger, A. (2024) How does Copilot for Microsoft 365 work? Data flow of a prompt. Available at: How does Copilot for Microsoft 365 work? Data flow of a prompt. | LinkedIn [Accessed on 23/04/2024]