In this blog, we will explore how to use Azure AI Search in conjunction with Azure OpenAI, LlamaIndex, and TruLens to build a robust Retrieval-Augmented Generation (RAG) evaluation system. This tutorial is inspired by Dr. Andrew Ng’s DeepLearning.AI course “Building and Evaluating Advanced RAG Applications”with instructors Jerry Liu (Co-Founder of LlamaIndex) and Anupam Datta (Co-Founder of TruEra, recently acquired by Snowflake), which highlights the importance of RAG evaluation. We aim to guide customers on how to use these tools to assess RAG performance, with a specific focus on the different retrieval modes in Azure AI Search.

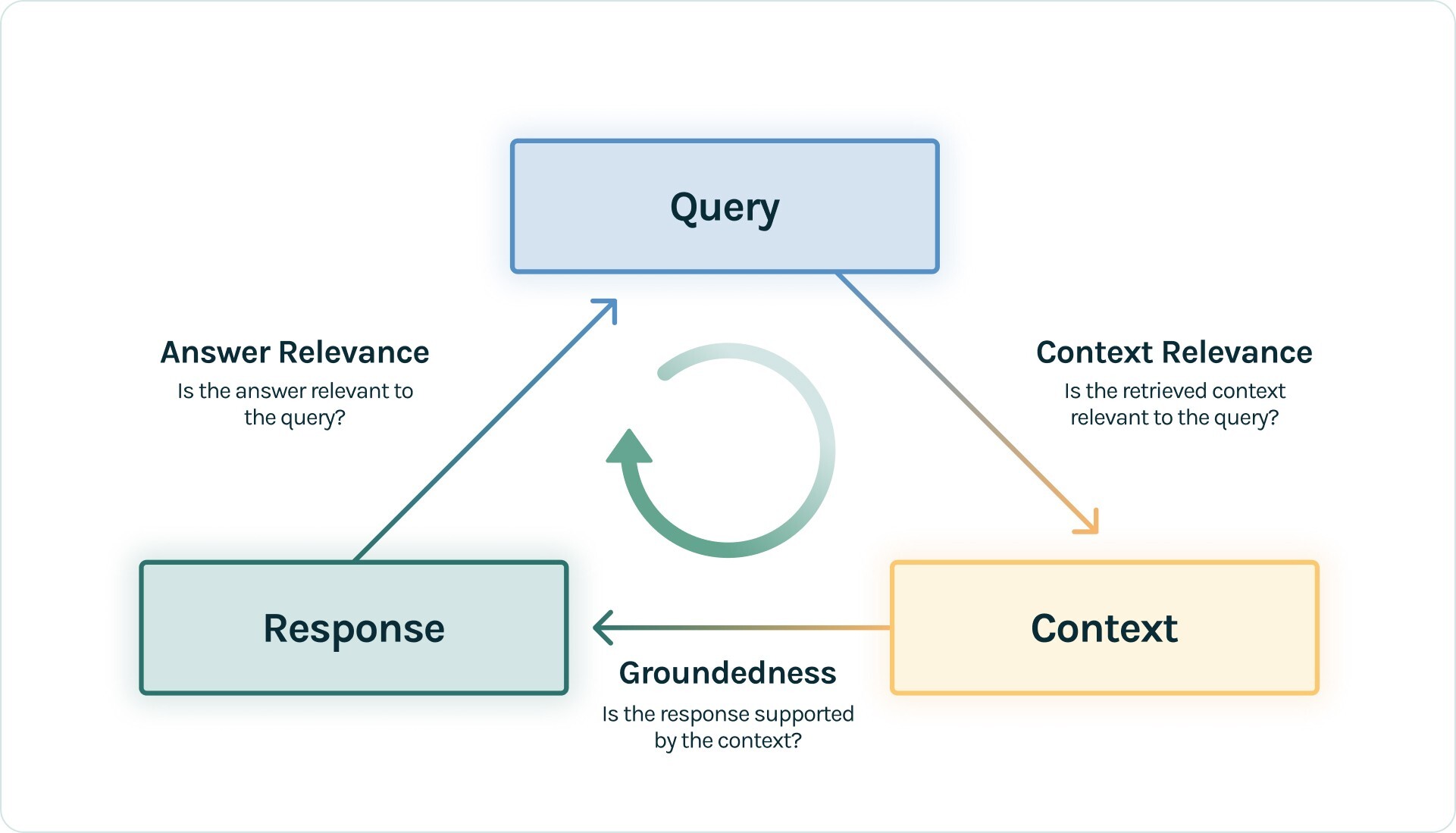

Introduction to RAG Triad by TrueLens

RAG has become the standard architecture for providing Large Language Models (LLMs) with context, reducing the occurrence of hallucinations. However, even RAG systems can suffer from hallucinations if the retrieval fails to provide sufficient or relevant context. TruLens’s RAG Triad evaluates RAG quality for both retrieval and generation along three key aspects:

- Context Relevance: Ensures that each piece of context is relevant to the input query.

- Groundedness: Verifies that the response is based on the retrieved context and not on hallucinations.

- Answer Relevance: Ensures the response accurately answers the original question.

By achieving satisfactory evaluations on these three aspects, we can ensure the correctness of our application and minimize hallucinations within the limits of its knowledge base.

Setting Up a Python Virtual Environment in Visual Studio Code

Before diving into the implementation, it’s crucial to set up your development environment:

- Open the Command Palette (Ctrl+Shift+P) in Visual Studio Code.

- Search for

Python: Create Environment. - Select

Venv. - Choose a Python interpreter (3.10 or later).

Next, install the required packages:COPYCOPY

!pip install llama-index

!pip install azure-identity

!pip install python-dotenv

!pip install trulens-eval

!pip install llama-index-vector-stores-azureaisearch

!pip install azure-search-documents --pre

Initial Setup

To begin, load your environment variables and initialize the necessary clients and models. This setup includes the Azure OpenAI and embedding models, and Azure Search clients.COPYCOPY

import os

from dotenv import load_dotenv

from azure.core.credentials import AzureKeyCredential

from azure.search.documents import SearchClient

from azure.search.documents.indexes import SearchIndexClient

from llama_index.core import SimpleDirectoryReader, StorageContext, VectorStoreIndex

from llama_index.embeddings.azure_openai import AzureOpenAIEmbedding

from llama_index.llms.azure_openai import AzureOpenAI

from llama_index.vector_stores.azureaisearch import AzureAISearchVectorStore, IndexManagement

# Load environment variables

load_dotenv()

# Environment Variables

AZURE_OPENAI_ENDPOINT = os.getenv("AZURE_OPENAI_ENDPOINT")

AZURE_OPENAI_API_KEY = os.getenv("AZURE_OPENAI_API_KEY")

AZURE_OPENAI_CHAT_COMPLETION_DEPLOYED_MODEL_NAME = os.getenv("AZURE_OPENAI_CHAT_COMPLETION_DEPLOYED_MODEL_NAME") # GPT-3.5-turbo

AZURE_OPENAI_EMBEDDING_DEPLOYED_MODEL_NAME = os.getenv("AZURE_OPENAI_EMBEDDING_DEPLOYED_MODEL_NAME") # text-embedding-ada-002

SEARCH_SERVICE_ENDPOINT = os.getenv("AZURE_SEARCH_SERVICE_ENDPOINT")

SEARCH_SERVICE_API_KEY = os.getenv("AZURE_SEARCH_ADMIN_KEY")

INDEX_NAME = "contoso-hr-docs"

# Initialize Azure OpenAI and embedding models

llm = AzureOpenAI(

model=AZURE_OPENAI_CHAT_COMPLETION_DEPLOYED_MODEL_NAME,

deployment_name=AZURE_OPENAI_CHAT_COMPLETION_DEPLOYED_MODEL_NAME,

api_key=AZURE_OPENAI_API_KEY,

azure_endpoint=AZURE_OPENAI_ENDPOINT,

api_version="2024-02-01"

)

embed_model = AzureOpenAIEmbedding(

model=AZURE_OPENAI_EMBEDDING_DEPLOYED_MODEL_NAME,

deployment_name=AZURE_OPENAI_EMBEDDING_DEPLOYED_MODEL_NAME,

api_key=AZURE_OPENAI_API_KEY,

azure_endpoint=AZURE_OPENAI_ENDPOINT,

api_version="2024-02-01"

)

# Initialize search clients

credential = AzureKeyCredential(SEARCH_SERVICE_API_KEY)

index_client = SearchIndexClient(endpoint=SEARCH_SERVICE_ENDPOINT, credential=credential)

search_client = SearchClient(endpoint=SEARCH_SERVICE_ENDPOINT, index_name=INDEX_NAME, credential=credential)

Note: You aren’t limited to using Azure OpenAI or OpenAI here, you can use whatever embedding model and language model that is supported in LlamaIndex. See Models – LlamaIndex

Why this setup is important:

- Environment Variables: Securely managing credentials and endpoints.

- Model Initialization: Ensuring that both Azure OpenAI models are set up for further use.

- Search Clients: Establishing connections to Azure AI Search services to facilitate indexing and searching operations.

Vector Store Initialization

Set up the vector store using Azure AI Search. This step configures the settings for storing and managing your data vectors.COPYCOPY

from llama_index.core.settings import Settings

Settings.llm = llm

Settings.embed_model = embed_model

# Initialize the vector store

vector_store = AzureAISearchVectorStore(

search_or_index_client=index_client,

index_name=INDEX_NAME,

index_management=IndexManagement.CREATE_IF_NOT_EXISTS,

id_field_key="id",

chunk_field_key="text",

embedding_field_key="embedding",

embedding_dimensionality=1536,

metadata_string_field_key="metadata",

doc_id_field_key="doc_id",

language_analyzer="en.lucene",

vector_algorithm_type="exhaustiveKnn",

)

Personally, I love using the Azure AI Search LlamaIndex vector store integration for creating indexes as it’s designed to rapidly prototype with different strategies for RAG.

Load Documents and Create Vector Store Index

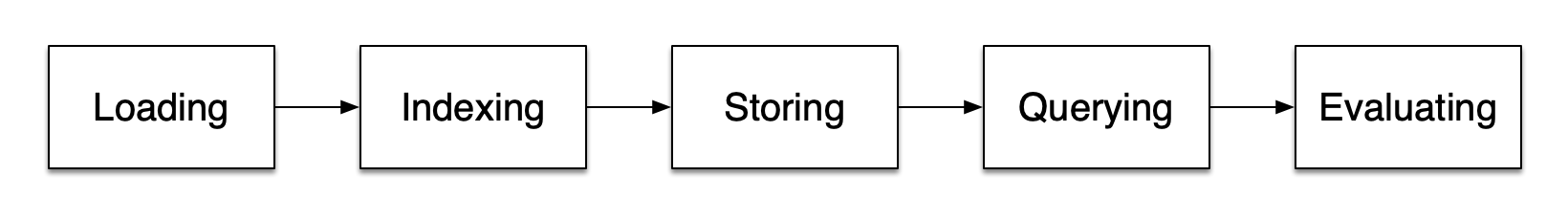

Load documents from the specified directory and create a vector store index. This step is essential for indexing your data and making it searchable.COPYCOPY

import nest_asyncio

from llama_index.core.node_parser import TokenTextSplitter

nest_asyncio.apply()

# Configure text splitter

text_splitter = TokenTextSplitter(separator=" ", chunk_size=512, chunk_overlap=128)

# Load documents

documents = SimpleDirectoryReader("data/pdf").load_data()

storage_context = StorageContext.from_defaults(vector_store=vector_store)

# Create index

index = VectorStoreIndex.from_documents(documents, transformations=[text_splitter], storage_context=storage_context)

Experiment with different loading and splitting strategies in LlamaIndex here: Loading Data – LlamaIndex

Set Retrievers and Query Engines

In this step, we’ll define 3 different query engines so we can evaluate which retrieval mode works the best in Azure AI Search.COPYCOPY

from llama_index.core.query_engine import RetrieverQueryEngine

from llama_index.core.vector_stores.types import VectorStoreQueryMode

# Query execution

query = "Does my health plan cover scuba diving?"

# Initialize retrievers and query engines

keyword_retriever = index.as_retriever(vector_store_query_mode=VectorStoreQueryMode.SPARSE, similarity_top_k=10)

hybrid_retriever = index.as_retriever(vector_store_query_mode=VectorStoreQueryMode.HYBRID, similarity_top_k=10)

semantic_hybrid_retriever = index.as_retriever(vector_store_query_mode=VectorStoreQueryMode.SEMANTIC_HYBRID, similarity_top_k=10)

keyword_query_engine = RetrieverQueryEngine(retriever=keyword_retriever)

hybrid_query_engine = RetrieverQueryEngine(retriever=hybrid_retriever)

semantic_hybrid_query_engine = RetrieverQueryEngine(retriever=semantic_hybrid_retriever)

# Query and print responses

for engine_name, engine in zip(['Keyword', 'Hybrid', 'Semantic Hybrid'],

[keyword_query_engine, hybrid_query_engine, semantic_hybrid_query_engine]):

response = engine.query(query)

print(f"{engine_name} Response:", response)

print(f"{engine_name} Source Nodes:")

for node in response.source_nodes:

print(node)

print("\n")

COPYCOPY

Keyword Response: Your health plan covers scuba diving lessons as part of the benefits program.

Keyword Source Nodes:

Node ID: 7e5b2642-4b79-4263-9bb7-210e04f49c2d

Text: Overview Introducing PerksPlus - the ultimate benefits program

designed to support the health and wellness of employees. With

PerksPlus, employees have the opportunity to expense up to $1000 for

fitness -related programs, making it easier and more affordable to

main tain a healthy lifestyle. PerksPlus is not only designed to

support employ...

Score: 7.844

Hybrid Response:(omitted for brevity)

Hybird Source Nodes: (omitted for brevity)

Semantic Hybrid Response: (omitted for brevity)

Semantic Hybrid Source Nodes: (omitted for brevity)

According to the Azure AI Search Blog Post, Hybrid Search + Semantic Ranker (cross-encoder model), performs the best retrieval. Let’s validate that on our own dataset with real customer queries!

So far, we have used LlamaIndex to create our Azure AI Search index which handled the loading, chunking, and vectorization with only a few lines of code. Let’s take a look in the Azure Portal to verify the properties of my index.

Note that the Vector Index Size is 0 Bytes because we are using Exhaustive KNN as a vector algorithm, so the vectors are stored only on disk. See Vector search – Azure AI Search | Microsoft Learn for more details on different vector indexing algorithms.

Everything looks good! Now, we can being running some RAG Evaluations.

Evaluate RAG using TruLens

Setup and run some evaluations using TruLens to assess the performance of our RAG system.COPYCOPY

from trulens_eval import Tru, Feedback, TruLlama, AzureOpenAI

import numpy as np

from llama_index.core.query_engine import RetrieverQueryEngine

from llama_index.core.vector_stores.types import VectorStoreQueryMode

from trulens_eval.app import App

# Initialize TruLens

tru = Tru()

tru.reset_database()

provider = AzureOpenAI(

deployment_name=AZURE_OPENAI_CHAT_COMPLETION_DEPLOYED_MODEL_NAME,

api_key=AZURE_OPENAI_API_KEY,

azure_endpoint=AZURE_OPENAI_ENDPOINT,

api_version="2024-02-01"

)

context = App.select_context(query_engine)

# Define feedback functions

f_groundedness = Feedback(provider.groundedness_measure_with_cot_reasons).on(context.collect()).on_output()

f_answer_relevance = Feedback(provider.relevance).on_input_output()

f_context_relevance = Feedback(provider.context_relevance_with_cot_reasons).on_input().on(context).aggregate(np.mean)

feedbacks = [f_context_relevance, f_answer_relevance, f_groundedness]

# Function to get TruLens recorder

def get_prebuilt_trulens_recorder(query_engine, app_id):

tru_recorder = TruLlama(query_engine, app_id=app_id, feedbacks=feedbacks)

return tru_recorder

# Execute and record queries

def execute_and_record_queries(query_engine, app_id, eval_questions):

tru_recorder = get_prebuilt_trulens_recorder(query_engine, app_id=app_id)

with tru_recorder as recording:

for question in eval_questions:

query_engine.query(question)

# Load evaluation questions

eval_questions = []

with open("eval/eval_contoso_hr.txt", "r") as file:

for line in file:

item = line.strip()

eval_questions.append(item)

# Execute and record queries for all query engines

execute_and_record_queries(keyword_query_engine, "baseline", eval_questions)

execute_and_record_queries(hybrid_query_engine, "hybrid", eval_questions)

execute_and_record_queries(semantic_hybrid_query_engine, "semantic_hybrid", eval_questions)

You can find more information about the feedback functions here:

Query Types and Definitions

This section provides definitions and examples for various types of queries used in our evaluation dataset, eval_contoso_hr.txt.

| Query Type | Explanation | Example |

| Concept Seeking queries | Abstract questions that require multiple sentences to answer. | Why is PerksPlus considered a comprehensive health and wellness program? |

| Exact snippet search | Longer queries that are exact sub-strings from the original paragraph. | “expense up to $1000 for fitness-related programs” |

| Web search-like queries | Shortened queries similar to those commonly entered into a search engine. | PerksPlus fitness reimbursement program |

| Low query/doc term overlap | Queries where the answer uses different words and phrases from the question, which can be challenging for a retrieval engine to find. | Comprehensive wellness program for employees |

| Fact seeking queries | Queries with a single, clear answer. | How much can employees expense under PerksPlus? |

| Keyword queries | Short queries that consist of only the important identifier words. | Mental health coverage Northwind |

| Queries with misspellings | Queries with typos, transpositions, and common misspellings introduced. | Mentl helth coverage Northwind |

| Long queries | Queries longer than 20 tokens. | Explain how PerksPlus supports both physical and mental health of employees, including examples of activities and lessons covered under the program. |

| Medium queries | Queries between 5 and 20 tokens long. | List three fitness activities covered by PerksPlus. |

| Short queries | Queries shorter than 5 tokens. | Plan exclusions |

Note: For the evaluation dataset, I leveraged ChatGPT to procure a list of 100 questions (10 from each category) covering the above categories to ensure a diverse pool. In practice, you should obtain a representative sample of real-production queries users perform. For more information on query categorization, refer to the Azure AI Search blog post.

Get Leaderboard

Let’s now print a leaderboard to see the best performing retrieval mode for this RAG Evaluation.COPYCOPY

tru.get_leaderboard(app_ids=["baseline", "hybrid", "semantic_hybrid"])

| groundedness_measure_with_cot_reasons | relevance | context_relevance_with_cot_reasons | |

| semantic_hybrid | 0.718439 | 0.969 | 0.686005 |

| hybrid | 0.641838 | 0.959 | 0.675129 |

| baseline | 0.600933 | 0.953 | 0.671587 |

Just as expected, Hybrid Search with Semantic Ranker was the highest performing retrieval mode across all 3 of our evaluation metrics. Let’s dive a bit deeper into the evaluation results now!

I elected to use GPT-35-Turbo model here as my langauage model to optimize for speed and cost. If you want to focus on accuracy, I’d recommend using a newer model such as gpt-4o.

Visualizing Results with TruLens Dashboard

To gain deeper insights and visualize the evaluation metrics, you should run the TruLens dashboard. The dashboard provides a comprehensive view of the performance of different query engines and helps in identifying areas of improvement.

Running the Dashboard

Execute the following command to start the dashboard:COPYCOPY

tru.run_dashboard()

Dashboard Results Explanation

Below is a screenshot of the TruLens dashboard showing the performance metrics of different query engines using in Azure AI Search: baseline, hybrid, and semantic_hybrid.

The dashboard provides the following key metrics for each query engine:

- Records: The total number of queries evaluated.

- Average Latency (Seconds): The average time taken to process each query.

- Total Cost (USD): The total cost incurred for processing the queries.

- Total Tokens: The total number of tokens processed.

- Context Relevance (with COT reasons): This metric assesses the relevance of the retrieved context to the input queries. A higher value signifies better relevance.

- Relevance: This metric measures how relevant the generated answers are to the questions asked. A higher value signifies higher relevance.

- Groundedness Measure (with COT reasons): This metric evaluates the groundedness of the answers, ensuring that the responses are based on the retrieved context and not hallucinated. A higher value signifies better groundedness.

Deep Dive into semantic_hybrid User Inputs and Responses

In this section, we will take a closer look at the semantic_hybrid evaluation results. We’ll analyze the user inputs, responses, and specific queries that resulted in a low context relevance score.

Evaluation Records

The table below shows the records of user inputs, responses, and the corresponding relevance, groundedness, and context relevance scores. Notice that while most responses have high relevance scores, some have lower context relevance scores.

Trace Details for a Specific Query

Here we delve into the trace details of a specific query that resulted in a low context relevance score. This helps us understand the step-by-step processing of the query and where it might have fallen short.

Feedback Results for Low Context Relevance Scores

Example Query with Low Context Relevance Score

User Input

“Discuss the importance of performance reviews at Contoso Electronics and how they contribute to employee development, including the process and feedback mechanisms used.”

Output

“Performance reviews at Contoso Electronics play a crucial role in employee development by providing a platform for discussing past performance, setting goals for the upcoming year, and offering constructive feedback. The process involves a two-way dialogue between managers and employees, encouraging honesty and openness. This allows employees to discuss their successes and challenges, while also receiving positive and constructive feedback aimed at helping them grow in their roles. Additionally, the written summary provided during the review session includes a rating of the employee’s performance, feedback, and goals and objectives for the upcoming year, ensuring clarity and alignment on expectations.”

Context and Analysis

1st Chunk/Node

Text:

7. Accountability: We take responsibility for our actions and hold ourselves and others accountable for their performance.

Community: We are committed to making a positive impact in the communities in which we work and live.

Performance ReviewsPerformance Reviews at Contoso Electronics

At Contoso Electronics, we strive to ensure our employees are getting the feedback they need to continue growing and developing in their roles. We understand that performance reviews are a key part of this process and it is important to us that they are conducted in an effective and efficient manner.

Performance reviews are conducted annually and are an important part of your career development. During the review, your supervisor will discuss your performance over the past year and provide feedback on areas for improvement. They will also provide you with an opportunity to discuss your goals and objectives for the upcoming year.

Performance reviews are a two-way dialogue between managers and employees. We encourage all employees to be honest and open during the review process, as it is an important opportunity to discuss successes and challenges in the workplace.

We aim to provide positive and constructive feedback during performance reviews. This feedback should be used as an opportunity to help employees develop and grow in their roles.

Employees will receive a written summary of their performance review which will be discussed during the review session. This written summary will include a rating of the employee’s performance, feedback, and goals and objectives for the upcoming year.

We understand that performance reviews can be a stressful process. We are committed to making sure that all employees feel supported and empowered during the process. We encourage all employees to reach out to their managers with any questions or concerns they may have.

We look forward to conducting performance reviews with all our employees. They are an important part of our commitment to helping our employees grow and develop in their roles.

Result: 0.80

Reason:

- Criteria: The context provides detailed information about the importance of performance reviews at Contoso Electronics, the process of conducting the reviews, the feedback mechanisms used, and the company’s commitment to employee development.

- Supporting Evidence: The context discusses the importance of performance reviews at Contoso Electronics, emphasizing their role in employee growth and development. It outlines the process of the reviews, including the annual frequency, the two-way dialogue between managers and employees, and the provision of written summaries with feedback and goals. Additionally, it addresses the feedback mechanisms used, highlighting the focus on positive and constructive feedback to help employees develop and grow in their roles. The context also emphasizes the company’s commitment to supporting and empowering employees during the review process. Overall, the context provides comprehensive information relevant to the importance of performance reviews, the process, feedback mechanisms, and employee development at Contoso Electronics.

2nd Chunk/Node

Text:

• Manage performance review process and identify areas for improvement

• Provide guidance and support to managers on disciplinary action

• Maintain employee records and manage payrollQUALIFICATIONS:

• Bachelor’s degree in Human Resources, Business Administration, or related field

• At least 8 years of experience in Human Resources, including at least 5 years in a managerial role

• Knowledgeable in Human Resources principles, theories, and practices

• Excellent communication and interpersonal skills

• Ability to lead, motivate, and develop a high-performing HR team

• Strong analytical and problem-solving skills

• Ability to handle sensitive information with discretion

• Proficient in Microsoft Office SuiteDirector of Research and Development

Job Title: Director of Research and Development, Contoso Electronics

Position Summary:

The Director of Research and Development is a critical leadership role in Contoso Electronics. This position is responsible for leading the research, development and innovation of our products and services. This role will be responsible for identifying, developing, and executing initiatives to drive innovation, production, and operational excellence. The Director of Research and Development will also be responsible for leading a team of professionals in the research, development and deployment of both existing and new products and services.

Responsibilities:

• Lead the research, development, and innovation of our products and services

• Identify and develop new initiatives to drive innovation, production, and operational excellence

• Develop and implement strategies for rapid and cost effective product and service development

• Lead a team of professionals in research, development and deployment of both existing and new products and services

• Manage external relationships with suppliers, vendors, and partners

• Stay abreast of industry trends and best practices in product and service development

• Monitor product and service performance and suggest/implement improvements

• Manage the budget and ensure cost efficiency

Result: 0.20

Reason:

- Criteria: The context provided does not discuss the importance of performance reviews at Contoso Electronics or how they contribute to employee development, including the process and feedback mechanisms used.

- Supporting Evidence: The context mainly focuses on the responsibilities and qualifications of the Director of Research and Development at Contoso Electronics. It does not provide any information about the importance of performance reviews, their contribution to employee development, or the process and feedback mechanisms used at the company. Therefore, the relevance to the given question is minimal.

Conclusion

Through detailed analysis of the metrics and trace details, we’ve identified potential areas for improvement in both the retrieval and response generation processes. This in-depth examination enables us to enhance the context relevance and overall performance of the semantic_hybrid retrieval mode.

To further improve these evaluation metrics, we can experiment with advanced RAG strategies such as:

- Adjusting the Chunk Size

- Modifying the Overlap Window

- Implementing a Sentence Window Retrieval

- Utilizing an Auto-Merging Retrieval

- Applying Query Rewriting techniques

- Experimenting with Different Embedding Models

- Testing Different Large Language Models (LLMs)

By following these steps and using the tools provided, you can effectively evaluate and optimize your RAG system, ensuring high-quality, contextually relevant responses that minimize hallucinations.

References

- azure-ai-search-python-playground/azure-ai-search-rag-eval-trulens.ipynb at main · farzad528/azure-ai-search-python-playground (github.com)

- Azure AI Search – LlamaIndex

LlamaIndex Quickstart –

TruLens

- Azure AI Search: Outperforming vector search with hybrid retrieval and ranking capabilities – Microsoft Community Hub

This blog is part of Microsoft Azure Week! Find more similar blogs on our Microsoft Azure Landing page here.

About the author:

My mission is to redefine the potential of Search & AI, empowering individuals to create innovative and transformative digital experiences.

Reference:

Sunavala, F. (2024) Evaluating Azure AI Search with LlamaIndex and TruLens. Available at: Evaluating RAG with Azure AI Search, LlamaIndex, and TruLens (hashnode.dev) [Accessed on 24/06/2024]